Widely Viewed Content Report Analysis, Q2 2022

By Jeff Allen, Integrity Institute Chief Research Officer and Co-Founder

Outline

Content that violates Facebook’s policies continues to be extremely prevalent among the top content

17.5% of the top content violates Facebook’s policies

Misinformation continues to be present among the top links on the platform

10% of the top links contain or were spread through misinformation, 5% of overall top content

The majority of the top content on the platform continues to fail basic media literacy checks

Unoriginal content from anonymous accounts dominates the top Reels

Methodology and Data

This post is part of our ongoing series to analyze Facebook’s regular Widely Viewed Content Report. For more details on our methodology and access to data, see our Widely Viewed Content Analysis resource. Our tracking dashboard and all data used in the analysis are also publicly available.

Overview

Overall, this release of the Widely Viewed Content Report is similar to the previous one released for Q1 of 2022. Violating content remains alarmingly prevalent on the platform. 17.5% of the top content violated Facebook’s Community Standards. Because Facebook now discloses the violation type, we know that most of those violations fell under its Inauthentic Behavior policy, but intellectual property violations also show up here. Misinfo also makes a return. Two of the top 20 most viewed links on the platform, 10% of links and 5% of overall content, made it onto the lists due to misinformation narratives around them.

And finally, 67.5% of the top content fails at least one of our media literacy checks around identity transparency, content production, account networking, and violations. All of these numbers are worse than, but comparable to, those in the Q1 2022 report (10% violating, 1.25% misinfo, 63.75% fail media literacy checks).

The high prevalence of violating content in the top lists continues to be driven by inauthentic networks, which are able to find large success on the platform.

A Case Study in Misinformation

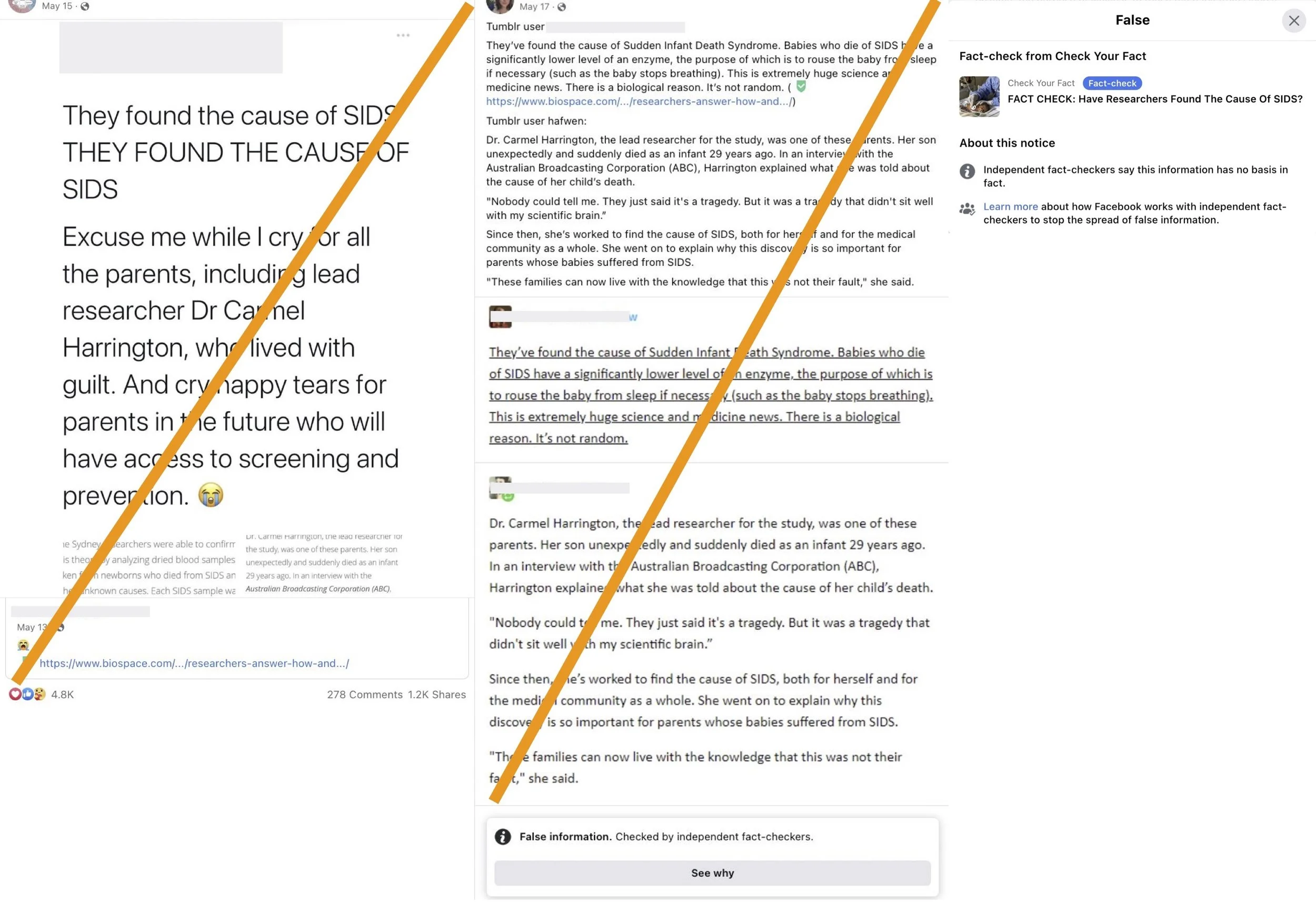

There are two links among the top 20 that we labeled as misinformation. One of them is an interesting case study of how accurate information can become amplified on platforms in inaccurate and misleading ways.

The 9th most viewed link was a BioSpace.com writeup summarizing a recent study on sudden infant death syndrome (SIDS). The study “established a link between having low levels of a certain enzyme and Sudden Infant Death Syndrome (SIDS)” (Source). The article itself doesn't actually contain misinformation. However, when the article was shared on Facebook, it primarily spread through a narrative that the researchers in the study had “found the cause of SIDS” (Source). The original headline of the article on BioSpace.com overstated the findings of the study in a way that supported this interpretation, but BioSpace has since updated the headline to be more accurate. This exaggerated claim about the impact of the study was found to be misleading when it was fact checked by Check Your Fact. So while the article didn't contain misinformation, it reached the 9th most viewed link position because it was caught up in a misinformation narrative, which is why we are labeling it as misinformation.

Check Your Fact referenced a post on Facebook that got over 22,000 shares and has since been removed. But the top sources of engagement on the article that were still up also make reference to the exaggerated claim. Some of them are labeled as misinformation by Facebook, and some are not.

This is an example that makes clear how misinformation is amplified on platforms in a way that is difficult to stop. An accurate story, with an overstated headline, can be exaggerated and framed in misleading ways when spread online. The exaggerations spread much faster than the more accurate versions of the story.
While the posts with the inaccurate claims received tens of thousands of engagements on Facebook, the post created by BioSpace for the article received 13 total engagements. And even when fact checkers come to clarify the situation, the narrative can show up in new media, like new screenshots of tweets, that are extremely difficult for fact checkers to uncover without sophisticated media matching tools. Most of the posts on Facebook that made the misleading claim did not have the “False Information” label.

The end result is millions of people exposed to health misinformation, and only a fraction of them seeing the more accurate information. And in this particular case, it’s hard to point to a villain. Publishers can get headlines wrong; it happens. It’s understandable that people would be excited and eager to share a medical breakthrough on a tragic health issue. The truth takes time to sort out and spread. It’s hard to blame the creator of the content, the sharers of the content, or the fact checkers of the content for this outcome. The true culprit in this case is the design of the platform and algorithms that are distributing content, which exploit weaknesses in content creators, consumers, and validators to spread misinformation. Algorithms that prioritize predicted engagement above other considerations will always give misinformation, as well as many other types of harmful content, the advantage over truth in the information ecosystem.

Alternative ranking methods are plentiful and working well for other platforms. PageRank, the foundational algorithm of Google Search, is so old it is out of patent, but it is still remarkably effective. Our social-aware PageRank calculation continues to do an extremely good job of separating content that passes media literacy checks from the content that fails.
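
This post does not spell out how the social-aware PageRank score is computed, so what follows is only a minimal sketch of the textbook algorithm, run by power iteration on a made-up graph. The account names, the link structure, and the damping factor of 0.85 are all illustrative assumptions, not our data or our method. What it shows is the basic idea: a source earns score by being linked to by other well-regarded sources, not by how much engagement it is predicted to get.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Textbook PageRank by power iteration.

    links maps each node to the list of nodes it links to.
    Returns a dict of node -> score; the scores sum to 1.
    """
    nodes = set(links)
    for targets in links.values():
        nodes.update(targets)
    nodes = sorted(nodes)
    n = len(nodes)

    # Start from a uniform distribution over all nodes.
    rank = {node: 1.0 / n for node in nodes}

    for _ in range(iterations):
        # Every node gets a small baseline share (the "random jump").
        new_rank = {node: (1.0 - damping) / n for node in nodes}
        for node in nodes:
            targets = links.get(node, [])
            if targets:
                # Split this node's score evenly among the nodes it links to.
                share = damping * rank[node] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A node with no outgoing links spreads its score evenly.
                share = damping * rank[node] / n
                for target in nodes:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical toy graph: three sources that reference each other,
# plus two repost pages that nobody established links to.
toy_links = {
    "health_agency": ["newsroom"],
    "newsroom": ["health_agency", "local_paper"],
    "local_paper": ["newsroom"],
    "repost_page_a": ["newsroom"],
    "repost_page_b": ["repost_page_a"],
}

ranks = pagerank(toy_links)
for name, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(f"{name:15s} {score:.3f}")
```

In this toy graph the repost pages end up at the bottom: linking out to a well-regarded source does not raise their own score, because score only flows in through links from others.
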
When the top content on Facebook comes from a source with a high PageRank score, it is much more likely to be original, to come from an actor that is transparent about who they are and what they are doing, and not to contain misinformation or other harmful content. The low PageRank score content contains almost all of the low quality, inauthentic content that is posted more for the benefit of the poster than for audiences on Facebook.

Unoriginal Content Continues to Dominate Reels

Video continues to be the dominant media type, which is no surprise given the focus on Reels and recommended content. The most viewed videos on Facebook continue to be unoriginal. They are taken from other platforms and posted on Facebook by anonymous accounts.

60% of the top posts overall were unoriginal, including the one post which was removed for an intellectual property violation. But that number jumps to 77% when you just look at videos. Ten of the 13 videos were taken from other platforms, including Twitter, Imgur, TikTok, and Reddit. People creating original Reels on Facebook and Instagram are still struggling to break into the most viewed content on the platform.

We Need Longer Lists and More Countries

It’s not an exaggeration to say that the US gets the most attention when it comes to integrity issues on the platforms. And the picture that the top content lists paint of the US is deeply troubling. 10% of the top links contain misinformation narratives. 17.5% of the top content violates Facebook’s policies, most of it from inauthentic networks. The correct answer for both of these numbers is 0%.

The problem is not that inauthentic networks and misinformation are present on Facebook. Spammers are gonna spam, content farmers are gonna farm. The most successful efforts to remove spam and inauthentic networks will still only be 90-99% successful. The question is what happens to that 1-10% of spam that manages to slip through the cracks? These lists in the WVC Reports make it clear: Facebook’s distribution systems and algorithms amplify them to be among the most viewed content on the platform. This situation makes integrity work essentially impossible on the platform.

And if this is what it looks like in the US, the country that gets the most attention, then internationally the situation is presumably grim. It is imperative that Facebook increase the size of the lists beyond the top 20 to a number that is more statistically significant and more representative of the true prevalence on the platform. And it is imperative that Facebook release these lists for all countries. Everyone has a right to know if this is what the online information ecosystem looks like in their home country.
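
To give a rough sense of why a list of 20 is too short, here is a small sketch that computes a 95% Wilson score interval for the 2-of-20 misinformation count, next to a hypothetical 200-item list with the same 10% rate. The 200-item list is invented for comparison. A top-20 list is also not a random sample of the platform, so treat the interval as an illustration of how little 20 items can pin down, not as a real prevalence estimate.

```python
import math


def wilson_interval(hits, n, z=1.96):
    """Approximate 95% Wilson score interval for the proportion hits / n."""
    if n <= 0 or not 0 <= hits <= n:
        raise ValueError("need n > 0 and 0 <= hits <= n")
    p = hits / n
    denom = 1 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return center - half_width, center + half_width


# From the report: 2 of the top 20 links were labeled as misinformation.
low_20, high_20 = wilson_interval(2, 20)

# Hypothetical comparison: the same 10% rate seen on a 200-item list.
low_200, high_200 = wilson_interval(20, 200)

print(f"2 of 20:   {low_20:.1%} to {high_20:.1%}")
print(f"20 of 200: {low_200:.1%} to {high_200:.1%}")
```

With only 20 links, the interval runs from roughly 3% to 30%. The same observed rate on a list ten times longer gives a much narrower range, which is the practical argument for longer lists.
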