What Does the Trust & Safety Team at OpenAI Do?

Has this ever happened to you? Someone, perhaps a fairly prominent so-called public thinker, will say something like “nobody is talking about XYZ problem”. And then you shake your head, maybe a little angry or frustrated, because your literal job is not just to think about XYZ problem: you also have solutions! You think about this space in a much deeper way than this person, who is just casually ignoring your life and work.

Imagine how the OpenAI safety team feels.

As integrity workers, we’re not unfamiliar with seeing people make sweeping arguments about what we do (based on assumptions that are pretty disconnected from our reality). Part of why Integrity Institute exists is to change that dynamic. We wanted to speak to those people – journalists, academics, policymakers, the public, and even our coworkers – directly. While we started with the shorthand that we cover “social media”, of course integrity teams cover many other kinds of products: games, literal 2-sided marketplaces, dating apps. And now, AI.

So! Pop quiz: what do GPT-4 (and other new AI tools) mean for humanity? I bet you have some off-the-cuff, sweeping ideas, right? I know I do.

Imagine how the OpenAI safety team feels.

Better yet – don’t imagine. Because we asked them.

Trust in Tech, the member-led podcast at Integrity Institute, brought on Dave Willner, head of T&S at OpenAI, and Todor Markov, applied safety researcher at OpenAI, to talk through all that. They also graciously allowed me to join Alice Hunsberger as a guest host and pepper them with questions and reactions.

This is a good one. You can listen here.

If you’re anything like me, there’s a ton of questions you might have for them. Such as: what does their job actually look like? How similar or different is the work to “classical” trust and safety work? What might “classical” and “AI” integrity work learn from each other? What do new powerful AI tools mean for integrity work writ large? Are integrity teams about to be swamped with incoming bad stuff?

But, before all that, I want to know: what is it like? What’s on their minds? What do they think of how the world is talking about what they do?

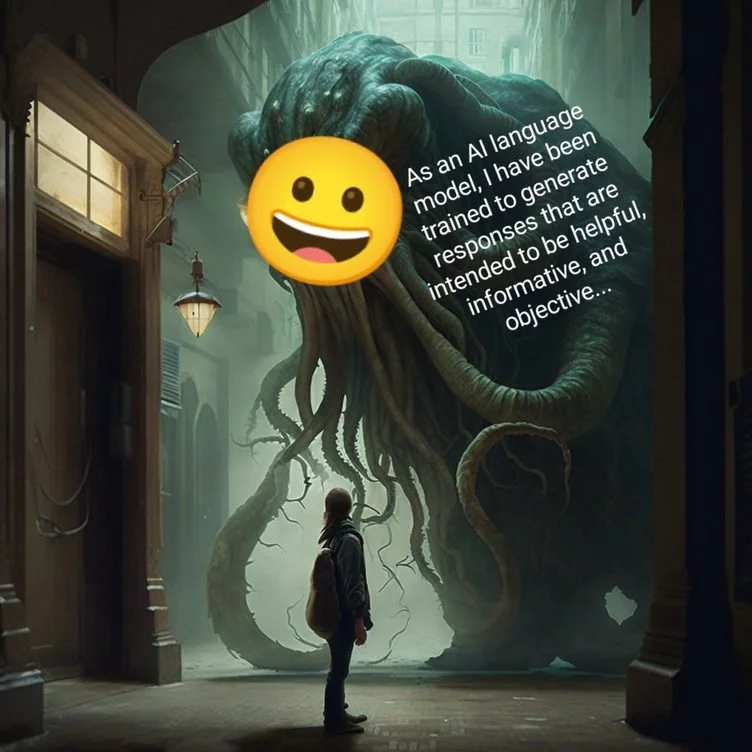

The podcast episode covers all that. From the actual specifics to the big ideas. Including going over this lovely meme:

One big thing that I took away is that these people are indeed integrity workers! Their jobs involve things like: Tradeoffs. Ensuring quality. Keeping an eye on how what they build affects the literal text that people say, while also realizing that, honestly, the better way to conceptualize the problem is that it’s about bad behavior and bad actors. Thinking about and designing around the adversarial behavior of users.

They do think about big picture questions, like how do we affix the smiley face mask on the shoggoth? But also they need to do small picture work: “literally we are launching DALL-E today, I need to content moderate it right now so it doesn’t show penises.” And everything in between.

Podcast link is here! Click me!

Another thing I took away is that they’re not the only integrity workers at OpenAI. “AI Alignment” (aka making the AI less of an unspeakable eldritch horror, more like a friendly dolphin) is also integrity work – it’s about structure, tradeoffs, incentives, and so on.

I’m really pleased with this episode. Todor and Dave are witty, fun, and wide-ranging. Alice is a great host (and did a wonderful job editing). Talha Baig, the producer in the background, did a lot of work getting us prepped. But beyond the personal idiosyncrasies and talents of the people involved – this is exactly where we want to be: talking to the integrity workers on the ground about big issues that touch on their day-to-day lives. Giving them the spotlight to share their practitioner perspective on big issues. And doing it with a smile and hopefully referencing dank memes.

Thanks, I hope you like it.

Sahar Massachi, Executive Director and cofounder

PS. Alice is equally thrilled to share this episode with the world! Check out her thoughts on the questions we covered during the episode and their implications for integrity work.